Six levels of 3D printer security against 3D-printed guns

RECODE.AM #35

Last Friday I described the background of another drama related to 3D-printed firearms going on in the United States. In my opinion, this is a strictly political topic, quite detached from everyday reality.

However, I did mention that if one actually wanted to carry this out “properly,” it would involve significant restrictions on 3D printer users and on their privacy.

I therefore decided to take a closer look at this issue and lay out all the possible levels of blocking the 3D printing of firearms at the software level.

I focused on individual users and desktop FFF technology because, in the authorities’ view, it is primarily individual users who pose the greatest threat to the health and safety of law-abiding citizens - not large companies and corporations.

So this article is about how to stop the average Joe from 3D printing guns, not a BlackRock portfolio company.

Level Zero - ridiculous

The simplest control mechanism could operate at a purely administrative and symbolic level. The printer software or slicer could analyze file names, folder names, and project metadata.

Models explicitly named “gun,” “rifle,” “frame,” “receiver,” or containing well-known firearm designations could be automatically blocked or flagged as suspicious.

Such a solution would be easy to implement and virtually cost-free in terms of computation, but at the same time completely ineffective against users who are not imbeciles.
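To show how little is involved, here is a minimal sketch of such a name filter. The blocklist is just the example terms from above, and `is_flagged` is a name I made up for illustration, not something taken from any real slicer.

```python
import re
from pathlib import PurePath

# Hypothetical blocklist built from the example terms in the text.
BLOCKED_TERMS = {"gun", "rifle", "frame", "receiver"}

def is_flagged(path: str) -> bool:
    """Return True if any blocked term appears as a word in the path."""
    tokens = set()
    # Break every folder name and the file name into lowercase words.
    for part in PurePath(path).parts:
        tokens.update(re.findall(r"[a-z]+", part.lower()))
    return not BLOCKED_TERMS.isdisjoint(tokens)

print(is_flagged("projects/rifle/lower_receiver_v2.stl"))  # True
print(is_flagged("projects/misc/bracket_17.stl"))          # False
print(is_flagged("picture_frame.stl"))                     # True
```

Renaming the file to `bracket_17.stl` is enough to get past it, while an innocent `picture_frame.stl` gets flagged.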

Level One - obvious

The most obvious method, and an effective one against known designs, is analyzing the contents of the file before printing even begins. The software could scan models for known geometric features characteristic of firearm components.

In practice, this would mean comparing the solid model against a database of patterns: trigger housings, slide rails, barrel holes, or the characteristic shapes of lower receivers.

Such a system would work similarly to signature-based antivirus software - effectively blocking known models, but vulnerable to any modification of geometry, scaling, fragmenting the model, or printing parts separately.

The weakness of this approach is, of course, the need to constantly update the model database and to deal with the cleverness of users, who could, for example, add extra solids to the model to disrupt the geometry (and then remove those solids manually afterward, much like supports).
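The antivirus analogy can be sketched in a few lines. This is a deliberately naive exact-match signature, assuming a model is just a list of vertex coordinates; a real system would presumably use something more tolerant, and the names `fingerprint`, `is_known`, and `SIGNATURES` are hypothetical.

```python
import hashlib

def fingerprint(vertices, decimals=3):
    """Hash a model's vertex set; independent of vertex order, exact-match only."""
    rounded = sorted(tuple(round(c, decimals) for c in v) for v in vertices)
    return hashlib.sha256(repr(rounded).encode()).hexdigest()

# A unit tetrahedron stands in for an entry in the pattern database.
known = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
SIGNATURES = {fingerprint(known)}

def is_known(vertices):
    """Return True if the model matches a stored signature exactly."""
    return fingerprint(vertices) in SIGNATURES

reordered = list(reversed(known))
scaled = [(x * 1.01, y * 1.01, z * 1.01) for x, y, z in known]

print(is_known(reordered))  # True: same geometry, different vertex order
print(is_known(scaled))     # False: a 1% scale change defeats the signature
```

The second result is the whole problem in miniature: any change to the geometry produces a different signature, so the database has to chase every variant.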

Level Two - advanced

A more advanced solution would interfere with the print preparation process itself. The slicer could analyze not only the final geometry, but also the functionality resulting from wall thickness, layer orientation, material choice, and predicted stresses.

If the algorithm determined that a given object, once printed, could withstand loads typical of firearm components, the print would be stopped.

This approach would require physical modeling and simulation, significantly lengthening the print preparation process.

It would also generate false positives in the case of legal machine parts, tools, or mechanical components with similar strength characteristics.

Level Three - AI-based, a.k.a. Minority Report

In the age of ubiquitous AI, the use of machine-learning algorithms would be obvious. Neural networks trained on massive datasets containing both legal technical models and known firearm designs could recognize an object’s function based solely on geometry, rather than simple signatures.

Such a system could identify “design intent,” even if the model were deliberately distorted, split into parts, or hidden within a larger assembly.

In theory, this would offer the highest effectiveness, but also the greatest risk of abuse and error.

An algorithm is never one hundred percent certain, and the decision to stop a print would be based on statistical probability rather than unequivocal proof.
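A quick back-of-the-envelope calculation shows why statistical probability matters here. The sketch below applies Bayes' rule to a binary classifier; all three input numbers are made up purely for illustration and do not come from any real system.

```python
def positive_predictive_value(prevalence, sensitivity, specificity):
    """Probability that a flagged model really is a firearm part (Bayes' rule)."""
    true_pos = prevalence * sensitivity
    false_pos = (1.0 - prevalence) * (1.0 - specificity)
    return true_pos / (true_pos + false_pos)

# Assumed numbers: 1 in 10,000 prints is a firearm part,
# and the classifier is right 99% of the time in both directions.
ppv = positive_predictive_value(prevalence=0.0001,
                                sensitivity=0.99,
                                specificity=0.99)
print(f"{ppv:.1%}")  # 1.0%
```

Under these assumptions, roughly 99 out of every 100 stopped prints would belong to users who were printing something perfectly legal.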

This is a vision straight out of a Philip K. Dick story and its later film adaptation starring Tom Cruise… The 3D printer will guess what you intended to do and take appropriate action (for example, sending an email to the authorities saying that it feels concerned about the owner’s activity).

Level Four - 1984

The software could operate not only before printing, but also during the print itself. Analysis of images from a camera monitoring the build plate, data from vibration sensors, or characteristics of extruder operation could reveal that the emerging object matches known shapes of firearm components.

The printer could automatically stop the process before the object reached a usable form.

Such a solution would resemble DRM systems known from inkjet printers, where the device itself decides what it is allowed to print. In a home environment, this would mean constant monitoring of the device’s operation.

Everything would be cloud-based. The software could require sending the model to an external server for verification before printing. A central database would be continuously updated with new firearm designs and techniques for disguising them.

From the standpoint of effectiveness, this would be the most flexible solution, but also the most invasive. The user would lose control over who analyzes their private projects, and the printer itself would cease to be an offline tool operating solely within the home network.

George Orwell could not have described it better.

The final level

A fully comprehensive system for blocking the printing of illegal firearm parts would therefore have to be a hybrid system, combining simple rules, geometric analysis, machine learning, and monitoring of the printing process.
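Structurally, such a hybrid could be as simple as a chain of checks where the first objection stops the print. The sketch below is only a skeleton under my own assumptions: the `PrintJob` fields, the threshold, and the placeholder hash are all hypothetical, and each check stands in for one of the levels described above.

```python
from dataclasses import dataclass

@dataclass
class PrintJob:
    filename: str
    geometry_hash: str
    ml_score: float      # hypothetical classifier output in [0, 1]
    camera_alert: bool   # hypothetical flag from in-print monitoring

KNOWN_HASHES = {"abc123"}  # placeholder signature database
ML_THRESHOLD = 0.9         # arbitrary cut-off

# Each check returns a reason to block, or None to let the job through.
CHECKS = [
    lambda j: "blocked name" if "receiver" in j.filename.lower() else None,
    lambda j: "known geometry" if j.geometry_hash in KNOWN_HASHES else None,
    lambda j: "ml score" if j.ml_score >= ML_THRESHOLD else None,
    lambda j: "camera alert" if j.camera_alert else None,
]

def first_objection(job):
    """Run the checks in order and return the first reason to block, if any."""
    for check in CHECKS:
        reason = check(job)
        if reason is not None:
            return reason
    return None

print(first_objection(PrintJob("bracket.stl", "abc123", 0.2, False)))  # known geometry
print(first_objection(PrintJob("bracket.stl", "ffff", 0.2, False)))    # None
```

Note that every extra check in the list is also one more thing the printer needs to know about the job, which is exactly the trade-off discussed next.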

At the same time, it would have to confront a fundamental paradox: the more technically effective it is, the more it interferes with the user’s privacy and autonomy.

And here we probably get to the heart of the matter… Because software that blocks specific 3D models is one thing, but at the end of the day it is about verifying what the user is printing and whether they happen to be printing something that is - at that moment - illegal.

Because, you know, there is a much simpler solution:

Every 3D printer, when printing a part, leaves a unique and unforgeable “watermark” on the print. This topic has been discussed many times over the years, and technically it is much easier to implement than Levels Two to Four.

Every 3D printer is registered like a car (or a gun), and its watermark is assigned to the user - the printer’s owner.
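The registry side of this idea fits in a handful of lines. The sketch below assumes the watermark can be read back from the print as a short string and that each printer holds a secret key; the format, the `REGISTRY` contents, and the function names are all invented for illustration. How the mark would physically be embedded in the print is outside the scope of the example.

```python
import hashlib
import hmac

# Hypothetical registry: printer ID -> owner and per-printer secret key.
REGISTRY = {
    "PRN-0001": {"owner": "Jane Doe", "key": b"secret-key-1"},
}

def _tag(key, printer_id, job_id):
    """Keyed hash that only someone holding the printer's key can produce."""
    message = f"{printer_id}:{job_id}".encode()
    return hmac.new(key, message, hashlib.sha256).hexdigest()[:16]

def make_watermark(printer_id, key, job_id):
    """What the printer would embed in the part (assumed string format)."""
    return f"{printer_id}:{job_id}:{_tag(key, printer_id, job_id)}"

def identify_owner(watermark):
    """Return the registered owner if the watermark is valid, else None."""
    try:
        printer_id, job_id, tag = watermark.split(":")
    except ValueError:
        return None
    entry = REGISTRY.get(printer_id)
    if entry is None:
        return None
    expected = _tag(entry["key"], printer_id, job_id)
    return entry["owner"] if hmac.compare_digest(tag, expected) else None

mark = make_watermark("PRN-0001", b"secret-key-1", "42")
print(identify_owner(mark))                           # Jane Doe
print(identify_owner("PRN-0001:42:0000000000000000")) # None
```

No geometry analysis, no simulation, no AI: just a lookup table that ties every printed part to a name.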

From the authorities’ point of view - wouldn’t that be wonderful?

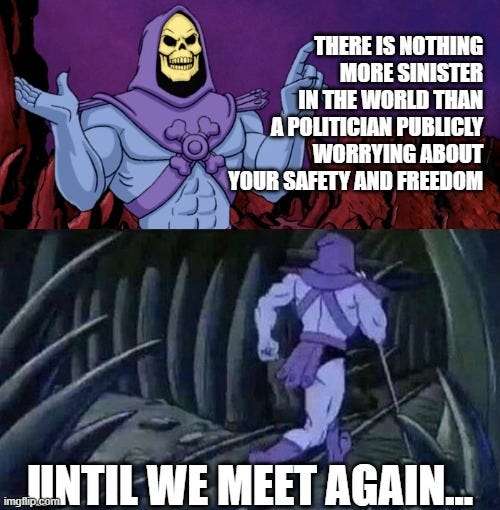

I’ll leave you with that thought... Skeletor will return with more reflections next week...